목표

- 진짜 같은 손글씨 이미지를 생성하는 GAN model 만들기

- 16개 이미지 중에 8개 이상이 인식가능한 숫자를 포함하고 있으면 된다.

필요한 라이브러리 import

import tensorflow as tf

import glob

import imageio

import matplotlib.pyplot as plt

import numpy as np

import os

import PIL

from tensorflow.keras import layers

import time

MNIST dataset 로드

(train_images, train_labels), (_, _) = tf.keras.datasets.mnist.load_data()

train_images = train_images.reshape(train_images.shape[0], 28, 28, 1).astype('float32')

train_images = (train_images - 127.5) / 127.5

BUFFER_SIZE = 60000

BATCH_SIZE = 256

train_dataset = tf.data.Dataset.from_tensor_slices(train_images).shuffle(BUFFER_SIZE).batch(BATCH_SIZE)

훈련 이미지의 형태 변환 및 정규화

- 훈련 이미지를 단일 채널 차원(28x28x1)을 포함하도록 변환한다. MNIST 데이터셋은 grayscale 이므로 하나의 채널이 필요하다.

- (train_images - 127.5) / 127.5 를 통해 픽셀 값을 [0, 255] 범위에서 [-1, 1] 범위로 스케일하여 정규화한다.

TensorFlow 데이터셋 생성

- tf.data.Dataset.from_tensor_slices(train_images): 훈련 이미지의 numpy 배열을 tf.data.Dataset 객체로 변환한다.

- shuffle(BUFFER_SIZE): 데이터셋을 60,000 (훈련 이미지의 수) 크기의 버퍼로 셔플한다. 셔플링은 훈련 중 데이터가 잘 섞이도록 도와 모델 성능을 향상시킬 수 있다.

- batch(BATCH_SIZE): 데이터셋을 256 크기의 배치로 나눈다.

Generator model Architecture

다음과 같은 architecture를 지닌 Generator 모델을 만들 것이다.

크기가 100인 초기 노이즈로부터 최종 28x28x1 크기의 이미지를 생성하면 된다.

def make_generator_model():

model = tf.keras.Sequential()

model.add(layers.Dense(7*7*256, input_shape=(100,)))

model.add(layers.BatchNormalization())

model.add(layers.LeakyReLU())

model.add(layers.Reshape((7, 7, 256)))

assert model.output_shape == (None, 7, 7, 256) # 모델이 7x7x256 크기의 출력을 내놓도록 검증

model.add(layers.Conv2DTranspose(

filters=128, # 필터 수

kernel_size=(5, 5), # 커널 크기

strides=(1, 1), # 스트라이드

padding='SAME', # 패딩 방식

use_bias=False

))

assert model.output_shape == (None, 7, 7, 128)

model.add(layers.BatchNormalization())

model.add(layers.LeakyReLU())

model.add(layers.Conv2DTranspose(

filters=64, # 필터 수

kernel_size=(5, 5), # 커널 크기

strides=(2, 2), # 스트라이드

padding='SAME', # 패딩 방식

use_bias=False

))

assert model.output_shape == (None, 14, 14, 64)

model.add(layers.BatchNormalization())

model.add(layers.LeakyReLU())

model.add(layers.Conv2DTranspose(

filters=1, # 필터 수

kernel_size=(5, 5), # 커널 크기

strides=(2, 2), # 스트라이드

padding='SAME', # 패딩 방식

))

return model

- Dense layer 의 input_shape 는 (행,열,채널) 순서대로 맞추어 넣어준다. 생성되는 noise 는 열크기가 100이므로 (100, ) 으로 표기해준다.

- n x n 크기의 Conv layer 를 적용하기 위해서는 Dense layer 를 거쳐 1차원 형태인 입력값을 n x n 이상으로 reshape 해주어야한다.

Dense layer 의 크기를 고려하여 적정한 7x7x256 로 reshape 해준다.

- Conv2DTranspose 레이어는 입력을 업샘플링하여 출력을 생성한다. 따라서 스트라이드 만큼 입력 크기가 배로 업샘플링되어 커널을 적용하여 출력이 생성된다.

ex) 7x7x128 인 입력 샘플을 2x2 strides 5x5x62 Conv layer 에 넣으면 스트라이드가 (2, 2)로 설정되어 있으므로 출력의 높이와 너비는 입력의 2배가 되어 결과는 14x14x62 가 된다.

Image generated from the untrained generator

위 모델을 통해 초기 노이즈로부터 랜덤한 이미지를 생성할 수 있다.

generator = make_generator_model()

noise = tf.random.normal([1, 100])

generated_image = generator(noise, training=False)

plt.imshow(generated_image[0, :, :, 0], cmap='gray')

(아직 훈련이 되지않은) 생성자를 이용해 이미지를 생성한 결과이다.

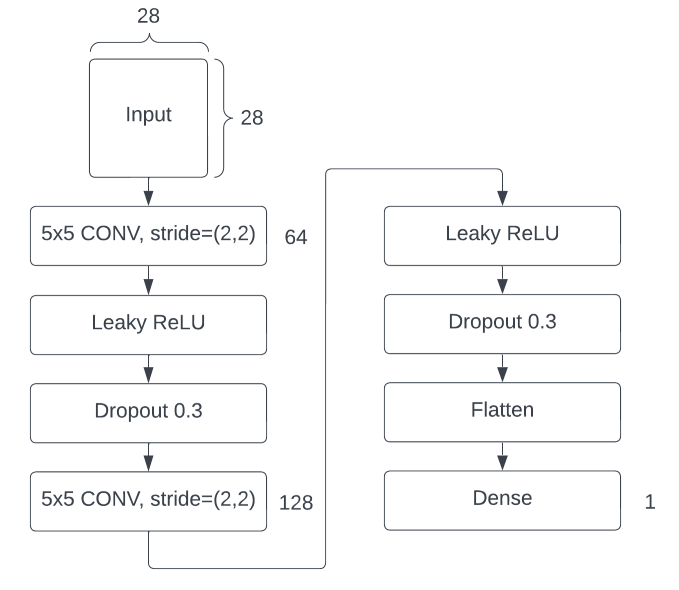

Discriminator model Architecture

다음과 같은 architecture를 지닌 Discriminator 모델을 만들 것이다.

Initial decision on the image

(아직까지 훈련이 되지 않은) 감별자를 사용하여, 생성된 이미지가 진짜인지 가짜인지 판별한다. 모델은 진짜 이미지에는 양수의 값 (positive values)을, 가짜 이미지에는 음수의 값 (negative values)을 출력하도록 훈련되어진다.

discriminator = make_discriminator_model()

decision = discriminator(generated_image)

print (decision)

# 결과 : tf.Tensor([[-0.00291351]], shape=(1, 1), dtype=float32)

손실함수와 옵티마이저 정의

이 메서드는 크로스 엔트로피 손실함수 (cross entropy loss)를 계산하기 위해 헬퍼 (helper) 함수를 반환한다.

cross_entropy = tf.keras.losses.BinaryCrossentropy(from_logits=True)

📌 크로스 엔트로피 손실함수란?

주로 분류 문제에서 사용되는 손실 함수 중 하나로 신경망이 예측한 확률 분포와 실제 레이블의 분포 간의 차이를 계산하여 모델을 학습시키는 데 사용된다.

q: 실제 레이블(0 또는 1)

p: 모델이 예측한 확률

예를 들어, 모델이 개를 예측할 확률이 0.8이고 실제로 개일 경우 q=1이라고 하자.

이 경우 크로스 엔트로피는 다음과 같이 계산된다.

Cross-Entropy(1,0.8)=−(1⋅log(0.8)+(1−1)⋅log(1−0.8))Cross-Entropy(1,0.8)

=−(1⋅log(0.8)+(1−1)⋅log(1−0.8))

=−(1⋅(−0.223)+0⋅(−1.609))

=−(−0.223)

=0.223

이 값은 모델의 예측이 실제 레이블과 얼마나 잘 일치하는지를 나타낸다.

이 값이 작을수록 모델의 예측이 실제와 가깝다고 볼 수 있다.

Discriminator의 loss function

real_output 은 discriminator가 진짜 이미지들에 대해 판별한 결과이다.

ex) [-1,1,-1,1]

fake_output 은 discriminator가 생성된 가짜 이미지들에 대해 판별한 결과이다.

ex) [0.3,-0.3,1,0.1]

이 두 결과 간의 차이를 구하기 위해서 크로스 엔트로피 손실함수 (cross entropy loss)를 이용해준다.

def discriminator_loss(real_output, fake_output):

real_loss = cross_entropy(tf.ones_like(real_output), real_output)

fake_loss = cross_entropy(tf.zeros_like(fake_output), fake_output)

total_loss = real_loss + fake_loss

return total_loss

이 메서드는 감별자가 가짜 이미지에서 얼마나 진짜 이미지를 잘 판별하는지 수치화한다. 진짜 이미지에 대한 감별자의 예측과 1로 이루어진 행렬을 비교하고,가짜 (생성된) 이미지에 대한 감별자의 예측과 0으로 이루어진 행렬을 비교한다. 1으로 이루어진 행렬을 만들기 위해서 tf.ones_like() 함수는 입력 텐서와 동일한 모양(shape) 및 데이터 타입(dtype)을 갖는 모든 요소가 1로 채워진 텐서를 생성한다. 0또한 마찬가지이다.

1으로 이루어진 행렬과 0으로 이루어진 행렬 각각이 정답 라벨 역할을 해주는 것이다.

따라서 진짜 이미지는 진짜 이미지도 예측되도록 되고, 가짜 이미지는 가짜 이미지로 예측되도록 학습된다.

결과적으로 discriminator 의 총 loss = 진짜를 진짜라고 판단하는 loss + 가짜를 가짜라고 판단하는 loss 가 된다.

Generator 의 loss function

Generaotr 는 fake_output이 이 진짜라고 판단될 수 있도록 학습되어야한다.

따라서 생성자의 손실함수는 감별자를 얼마나 잘 속였는지에 대해 수치화를 한다. 직관적으로 생성자가 원활히 수행되고 있다면, 감별자는 가짜 이미지를 진짜 (또는 1)로 분류를 할 것입니다. 여기서 우리는 생성된 이미지에 대한 감별자의 결정을 1로 이루어진 행렬과 비교를 할 것이다.

def generator_loss(fake_output):

loss = cross_entropy(tf.ones_like(fake_output), fake_output)

return loss

Optimizer 적용

감별자와 생성자는 따로 훈련되기 때문에, 감별자와 생성자의 옵티마이저는 다르다.

generator_optimizer = tf.keras.optimizers.Adam(1e-4)

discriminator_optimizer = tf.keras.optimizers.Adam(1e-4)

Training loop

훈련 루프는 생성자가 입력으로 랜덤시드를 받는 것으로부터 시작된다. 그 시드값을 사용하여 이미지를 생성한다. 감별자를 사용하여 (훈련 세트에서 갖고온) 진짜 이미지와 (생성자가 생성해낸) 가짜이미지를 분류한다. 각 모델의 손실을 계산하고, 그래디언트 (gradients)를 사용해 생성자와 감별자를 업데이트한다.

EPOCHS = 50

noise_dim = 100

num_examples_to_generate = 16

seed = tf.random.normal([num_examples_to_generate, noise_dim])@tf.function

def train_step(images):

noise = tf.random.normal([BATCH_SIZE, noise_dim])

with tf.GradientTape() as gen_tape, tf.GradientTape() as disc_tape:

generated_images = generator(noise, training=True)

real_output = discriminator(images, training=True)

fake_output = discriminator(generated_images, training=True)

gen_loss = generator_loss(fake_output)

disc_loss = discriminator_loss(real_output, fake_output)

gradients_of_generator = gen_tape.gradient(gen_loss, generator.trainable_variables)

gradients_of_discriminator = disc_tape.gradient(disc_loss, discriminator.trainable_variables)

generator_optimizer.apply_gradients(zip(gradients_of_generator, generator.trainable_variables))

discriminator_optimizer.apply_gradients(zip(gradients_of_discriminator, discriminator.trainable_variables))def train(dataset, epochs):

for epoch in range(epochs):

start = time.time()

for image_batch in dataset:

train_step(image_batch)

print ('Time for epoch {} is {} sec'.format(epoch + 1, time.time()-start))

📌@tf.function

💬GPT

데코레이터는 TensorFlow에서 성능을 향상시키기 위해 사용되는 기능 중 하나입니다. 이 데코레이터를 함수에 적용하면 TensorFlow에서 함수를 그래프로 변환하여 실행 속도를 높일 수 있습니다. 이러한 변환 과정을 통해 TensorFlow는 그래프 실행 최적화 기법을 사용하여 연산을 효율적으로 수행할 수 있습니다.

train_step 함수에 @tf.function 데코레이터를 적용하면 TensorFlow는 이 함수를 그래프로 변환하여 최적화합니다. 그 결과, train_step 함수가 호출될 때마다 그래프가 다시 생성되는 것이 아니라, 한 번 생성된 그래프가 재사용됩니다. 이는 반복적으로 호출되는 함수의 실행 속도를 향상시키는 데 도움이 됩니다.

따라서 @tf.function 데코레이터를 사용하면 TensorFlow 코드의 실행 속도를 향상시킬 수 있으며, 특히 반복적으로 호출되는 함수에 대해 이점을 얻을 수 있습니다.

Do train

train(train_dataset, EPOCHS)

Final images

predictions = generator(seed, training=False)

fig = plt.figure(figsize=(4,4))

for i in range(predictions.shape[0]):

plt.subplot(4, 4, i+1)

plt.imshow(predictions[i, :, :, 0] * 127.5 + 127.5, cmap='gray')

plt.axis('off')

plt.savefig('image.png')

plt.show()

'CS > 인공지능' 카테고리의 다른 글

| SVM 을 활용한 스팸 분류기 ( Spam Classification via SVM ) (1) | 2024.10.17 |

|---|---|

| 나이브 베이즈를 사용한 스팸 메일 분류기 (Spam Classification via Naïve Bayes) (3) | 2024.10.17 |

| [Tensorflow keras] image generation using Stable Diffusion (0) | 2024.06.24 |

| Simple Diffusion Image generate Model (0) | 2024.06.21 |

| Generative Adversarial Network (1) | 2024.05.28 |